
FraudGPT and GenAI: How will fraudsters use AI next?

Aug 6, 2025

Since ChatGPT’s mainstream debut in 2022, generative artificial intelligence (GenAI) has surged in use, with black hat tools like FraudGPT and WormGPT making their mark on the threat landscape.

FraudGPT is just one of many generative AI tools accessible to fraudsters online. These bad actors are leveraging the same kind of AI chatbots you use to draft an email to your boss to automate phishing campaigns and reproduce systemic attacks at scale. This flavor of “DIY fraud” is giving consumers and small businesses a run for their money, with financial institutions and fintechs absorbing much of the cost.

In this blog post, we’ll take a look at the technology behind AI-driven fraud trends and break down what it means for fraud detection. We’ll discuss how financial institutions and fintechs can enhance threat detection to combat vulnerabilities stoked by malicious AI.

What is FraudGPT?

FraudGPT is a subscription-based large language model (LLM) designed to function like OpenAI’s ChatGPT or Microsoft Copilot, but without any built-in safety guardrails. It first made headlines in 2023 for its ability to generate content specifically intended for fraud, including fake bank statements, pay stubs, and utility bills. The tool has been marketed for its ability to help fraudsters program malware, find card ranges that don't require extra security verification (non-VBV BINs, i.e., bank identification numbers not enrolled in Verified by Visa), identify vulnerable websites, and generate convincing phishing pages.

Source: FlowGPT, an AI marketplace considered the “Wild West” of GenAI apps

What about WormGPT?

Like FraudGPT, WormGPT came into the public eye in 2023. Built on top of the 2021 GPT-J model developed by EleutherAI, WormGPT was trained on malware code, phishing templates, and compromised emails to create a chatbot without ethical restrictions.

Accessible from Telegram and Discord, WormGPT has been marketed for its ability to generate sophisticated Business Email Compromise (BEC) attacks, automating the creation of targeted phishing campaigns that can adapt to different industries and corporate communication styles. Its session memory and formatting capabilities aid in the development of malicious code and malware.

Other threats: Xanthorox AI

Xanthorox AI is an AI tool that brands itself as the “Killer of WormGPT and all EvilGPT variants.” First surfacing in April 2025, Xanthorox markets its product “Xenware” as the first AI-generated advanced-featured ransomware capable of bypassing traditional security measures. The tool's modular architecture enables code generation, vulnerability exploitation, and data analysis, all while integrating voice and image processing capabilities for more sophisticated, automated attacks.

Unlike FraudGPT and WormGPT, which rely on existing language models, Xanthorox uses custom-built LLMs that call APIs to gather real-time information from over 50 search engines, giving fraudsters access to current data for their schemes. It also promises users complete data containment, eliminating the digital footprints that might otherwise help security teams trace fraudulent activities back to their source.

How are fraudsters using new AI tools?

Every enterprise has its own preferences and use cases for AI, and fraud rings are no different. Here are some of the most prevalent AI use cases that fall under the umbrella of financial fraud:

Social engineering scams

Social engineering scams rely on manipulating people, not systems. Instead of breaking through technical defenses, attackers trick individuals into handing over sensitive information or performing risky actions. By impersonating trusted individuals or organizations, fraudsters exploit human instincts like urgency, fear, or helpfulness to gain access.

The type of social engineering scam often depends on the communication channel used. For example:

- Phishing involves impersonating a trusted person or business over email

- Smishing uses text messages to carry out similar impersonation

- Vishing takes place through voice calls or voicemails

- Deepfake scams use AI-generated videos to mimic real people with alarming accuracy

Instead of casting a wide net (as is typical of phishing attacks), fraudsters are increasingly turning to “spear phishing” — a more zoomed-in approach that leverages personal details specific to each target.

Using GenAI, fraudsters can analyze and replicate written language, speech patterns, or even facial characteristics for more effective, targeted attacks. They can rapidly sort through data that has been scraped from the internet or stolen from private channels like email to discern which details will help make these impersonations more convincing.

Synthetic identity creation

AI has advanced the creation of synthetic identities: convincing personas built by combining real personally identifiable information (PII), such as names, addresses, and Social Security numbers, with fictional data.
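Because synthetic identities reuse real PII alongside invented details, one common defensive signal is a single Social Security number showing up with different names or birth dates across applications. The sketch below illustrates that heuristic. The `Application` record and `flag_shared_ssns` function are hypothetical names for illustration, not part of any vendor's product. Note that a hit here is a reason to look closer, not proof of fraud, since typos and legitimate name changes can trigger it too.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class Application:
    # Hypothetical, minimal application record; real onboarding data has many more fields.
    app_id: str
    ssn: str
    full_name: str
    date_of_birth: str  # ISO 8601, e.g. "1990-04-12"


def flag_shared_ssns(apps: list[Application]) -> dict[str, set[str]]:
    """Map each SSN seen with more than one distinct (name, DOB) pair to the
    application IDs that used it."""
    identities_by_ssn: dict[str, set[tuple[str, str]]] = defaultdict(set)
    apps_by_ssn: dict[str, set[str]] = defaultdict(set)
    for app in apps:
        # Normalize case and whitespace so "Sam Lee" and "sam  lee" count as one name.
        name = " ".join(app.full_name.lower().split())
        identities_by_ssn[app.ssn].add((name, app.date_of_birth))
        apps_by_ssn[app.ssn].add(app.app_id)
    return {
        ssn: apps_by_ssn[ssn]
        for ssn, identities in identities_by_ssn.items()
        if len(identities) > 1
    }


if __name__ == "__main__":
    apps = [
        Application("a1", "123-45-6789", "Jane Doe", "1990-04-12"),
        Application("a2", "123-45-6789", "Janet Dough", "1988-01-30"),
        Application("a3", "987-65-4321", "Sam Lee", "1975-07-01"),
        Application("a4", "987-65-4321", "sam  lee", "1975-07-01"),
    ]
    print(flag_shared_ssns(apps))
```

Here only the first SSN is flagged. The second appears twice, but both times with the same normalized name and birth date.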

Document forgery and document deepfakes

A necessary component of synthetic identity fraud and other financial scams, document forgery has become significantly more dangerous in the era of generative AI. Today’s fraudsters can use advanced models to rapidly fabricate or subtly manipulate sensitive documents used in identity verification, loan applications, and other onboarding workflows.

Machine learning models and image generators can now be trained to:

- Mimic real documents: These tools replicate fonts, layouts, logos, and even security features with shocking accuracy. AI models can simulate realistic lighting, texture, and photo composition, making fake documents nearly indistinguishable from legitimate ones.

- Modify real documents: Slight tweaks, such as inflating a bank balance, adjusting a pay period, or changing an address, can be automated and mass-produced with generative AI. These micro-forgeries are harder to detect because they often preserve most of the document’s original layout and metadata.

In either case, a forged document can bypass legacy verification systems if those systems rely only on visual inspection or static validation rules.

Deepfakes are coming for documents, too

Most people associate the term deepfake with video or voice manipulation. But in 2025, document deepfakes are on the rise, and they’re just as dangerous. These are AI-generated documents that don’t just look like the real thing; they are synthesized from scratch using cutting-edge image-generation models, often with no reference to a real document at all.

Source: Inscribe AI's in-house synthetic document dataset (2025)

Unlike traditional forgeries, document deepfakes are:

- Generated pixel by pixel using autoregressive models, rather than edited versions of real images.

- Photorealistic at a glance, with textures like paper folds, lighting inconsistencies, and convincing shadows that trick even trained reviewers.

- Prone to subtle errors, such as misspelled words, incorrect locations (e.g., fake store names), or odd formatting that AI tools like Inscribe can flag — but these issues are quickly disappearing as models evolve.

According to Inscribe’s research on GenAI documents, utility bills, invoices, and bank statements make up 70% of AI-generated document fraud attempts, and their realism is increasing. What makes these deepfakes especially dangerous is their accessibility: anyone with the right prompt and access to an open-source image model can generate a fake pay stub or lease agreement in seconds.

Source: Inscribe

The emergence of document deepfakes changes the question from “Does this look fake?” to “Can this document prove it’s real?” This new reality requires advanced detection systems that combine forensic visual analysis, metadata validation, and semantic coherence checks, all of which risk leaders should look for when selecting a document fraud detection vendor.
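A semantic coherence check can be as simple as asking whether a document's numbers agree with each other. For example, on a bank statement, each running balance should equal the previous balance plus that line's amount, so an inflated balance breaks the chain. The sketch below assumes the statement has already been parsed into lines with amounts and printed balances. The `StatementLine` type and `find_balance_breaks` function are hypothetical, and real parsing and OCR are out of scope.

```python
from dataclasses import dataclass
from decimal import Decimal


@dataclass
class StatementLine:
    description: str
    amount: Decimal           # credits positive, debits negative
    running_balance: Decimal  # balance printed on the statement after this line


def find_balance_breaks(opening: Decimal, lines: list[StatementLine]) -> list[int]:
    """Return indexes of lines whose printed running balance does not equal
    the previous printed balance plus the line's amount."""
    breaks = []
    previous = opening
    for i, line in enumerate(lines):
        if previous + line.amount != line.running_balance:
            breaks.append(i)
        # Chain from the printed balance, not the recomputed one, so one altered
        # figure shows up on at most two lines instead of every line after it.
        previous = line.running_balance
    return breaks


if __name__ == "__main__":
    statement = [
        StatementLine("Payroll deposit", Decimal("500.00"), Decimal("1500.00")),
        StatementLine("Rent", Decimal("-1200.00"), Decimal("300.00")),
        # Closing balance inflated; the genuine figure would be 214.50.
        StatementLine("Groceries", Decimal("-85.50"), Decimal("9214.50")),
    ]
    print(find_balance_breaks(Decimal("1000.00"), statement))  # [2]
```

`Decimal` avoids floating-point rounding that could cause false mismatches on currency values. This check has a clear limit. A forger who inserts a fake deposit and recomputes every later balance will pass it. That is why it belongs alongside visual and metadata analysis rather than replacing them.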

Credential stuffing attacks

Credential stuffing attacks are a type of brute force attack where fraudsters commit account takeover (ATO) fraud by obtaining users’ credentials for one account and using them to attempt to break into other unrelated accounts.

As data breaches become more common, credential stuffing attacks have increased too. AI-powered bots have made these attacks worse because they let fraudsters automate testing large volumes of username and password pairs across many sites, ultimately increasing their success rate.

For example, a fraudster might obtain a user’s email address and password for an Amazon account through a data breach and then use that same information to try to log in to different bank accounts in hopes that the user has the same username and password for other accounts.
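On the defensive side, stuffing has a recognizable shape. A single source fails logins against many *different* usernames in a short window, while a real customer who forgets a password fails repeatedly on *one* username. The sketch below flags that pattern with a per-IP sliding window. It makes several assumptions. The class and field names are hypothetical, the 5-minute window and 20-username threshold are illustrative rather than recommended values, and attempts are assumed to arrive in time order.

```python
from collections import defaultdict, deque
from dataclasses import dataclass


@dataclass
class LoginAttempt:
    timestamp: float  # seconds since epoch; attempts are assumed to arrive in time order
    source_ip: str
    username: str
    success: bool


class StuffingDetector:
    """Flag source IPs whose failed logins span many distinct usernames
    within a sliding time window."""

    def __init__(self, window_seconds: float = 300.0, max_distinct_users: int = 20):
        self.window_seconds = window_seconds
        self.max_distinct_users = max_distinct_users
        # Per source IP: (timestamp, username) for each recent failed attempt.
        self.failures: dict[str, deque] = defaultdict(deque)

    def observe(self, attempt: LoginAttempt) -> bool:
        """Record one attempt and return True if its source IP looks like stuffing.

        Successful logins are checked too: a success from an IP that is already
        over the threshold is the case most worth stopping.
        """
        recent = self.failures[attempt.source_ip]
        if not attempt.success:
            recent.append((attempt.timestamp, attempt.username))
        # Evict failures older than the window.
        while recent and attempt.timestamp - recent[0][0] > self.window_seconds:
            recent.popleft()
        distinct_users = {username for _, username in recent}
        return len(distinct_users) > self.max_distinct_users


if __name__ == "__main__":
    detector = StuffingDetector()
    # One IP cycling through 25 leaked username/password pairs in under a minute.
    flags = [
        detector.observe(LoginAttempt(t * 2.0, "203.0.113.7", f"user{t}@example.com", False))
        for t in range(25)
    ]
    print(flags.index(True))  # 20: the 21st distinct username crosses the threshold
```

Bot operators often rotate through proxy IPs to stay under per-IP limits. In practice, a signal like this is usually combined with device fingerprinting and breached-password checks rather than used alone.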

How powerful and accessible are malicious AI models?

LLMs are only as powerful as the data they’re trained on, and many cybersecurity experts question the legitimacy of these tools’ claims. In some cases, they’re designed to exploit aspiring hackers who lack the skills to build their own malware or other hacking tools. As Tanium’s Melissa Bischoping puts it, “The real scam is the fact that someone out there is trying to sell this as a wonder tool.”

But the names FraudGPT and WormGPT have become more of a label than specific tools built to help cybercriminals carry out attacks. Although the originals were taken down, hundreds of uncensored LLMs exist today, many of them borrowing the WormGPT branding for name recognition and searchability. And they’re not just on dark web marketplaces; they’re available on common developer sites like GitHub and on AI marketplaces.

Quantifying risk: Stats for understanding the GenAI threat in 2025

So how much real-world damage are these tools causing? It depends on who you ask, but the data offers a clearer picture of how GenAI is reshaping fraud and cybercrime:

- 42.5% of fraud attempts against the financial sector are due to AI, according to Signicat

- Deepfake attacks have grown 2,137% since ChatGPT’s 2022 launch — from just 0.1% of fraud attempts to 6.5%

- Deepfakes make up roughly 1 in 15 fraud cases

- A deepfake attempt occurred every five minutes in 2024, according to Entrust

- Digital document forgeries increased 244% year-over-year

- The number of malicious emails has surged by 4,151% since 2022, according to SlashNext, with phishing volume up by 856% year-over-year

Source: SlashNext

- In a simulation by SoSafe, 78% of participants opened phishing emails that were generated by AI. One in five (21%) proceeded to click on potentially harmful links or attachments within the email.

- 97% of organizations report challenges with identity verification — and over half (54%) believe AI will make identity fraud even harder to control.

For more insight into how fraud is changing, download Alloy’s 2025 Fraud Report

How can financial institutions and fintechs combat AI-driven fraud attacks?

Financial services is the most targeted industry for fraud attempts. As fraudsters continue to exploit AI for financial gain, financial organizations must adapt with equally advanced technologies to protect against these threats.

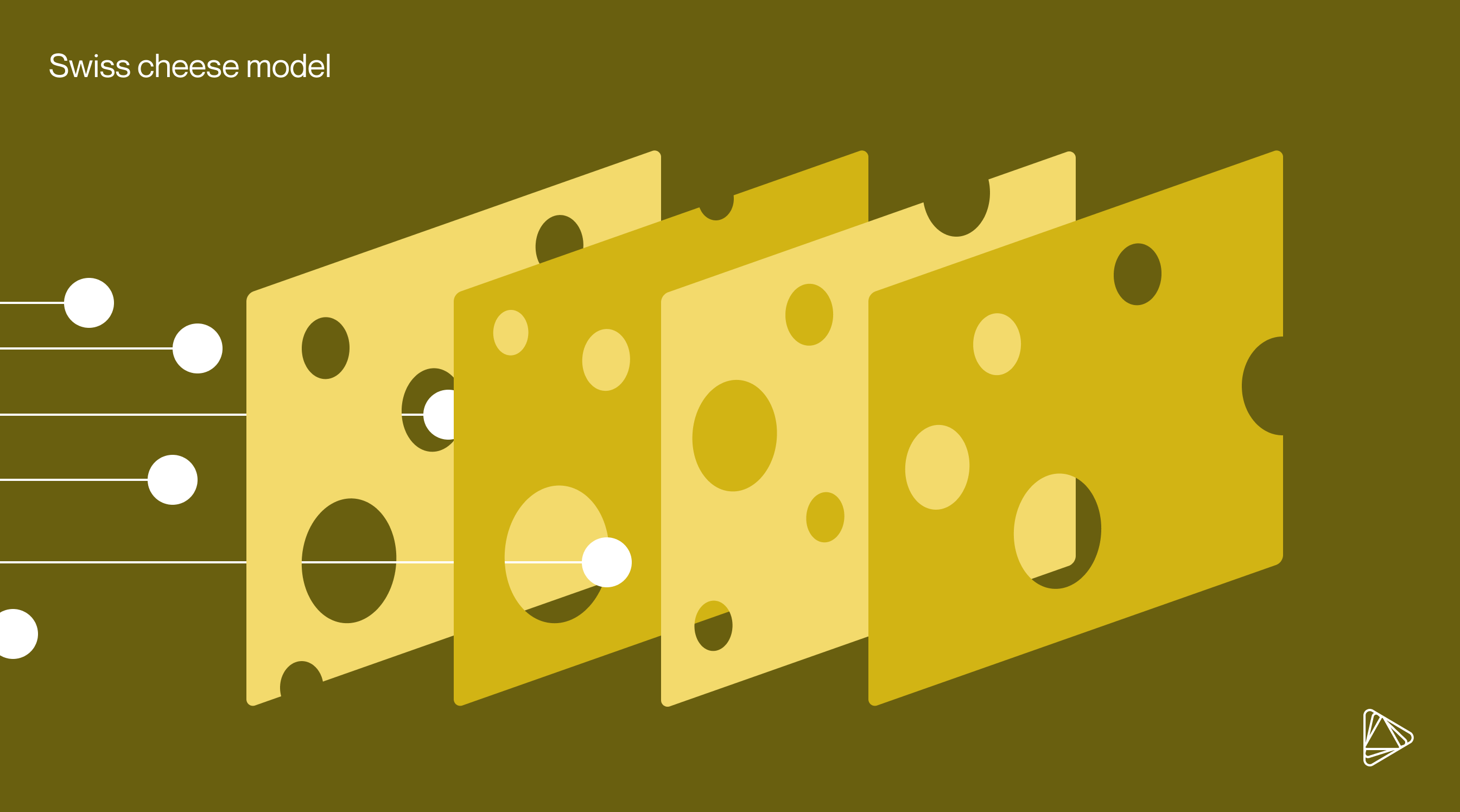

Risk experts recommend the “Swiss Cheese Model” as a useful framework for understanding how to mitigate AI-powered fraud. This approach conceptualizes the multiple layers of defense that prevent fraud from occurring, using Swiss cheese as the visual. Each layer has potential weaknesses or "holes," unique to its configuration. But when fraud prevention defenses are stacked like Swiss cheese slices, the likelihood of a threat passing through all layers diminishes significantly.
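The arithmetic behind the model explains why stacking imperfect layers works. If each layer misses an attack with some probability, and the layers fail independently, an attack gets through only if every layer misses it. The overall miss rate is therefore the product of the per-layer rates. The miss rates below are hypothetical, chosen only to show the effect.

```python
import math


def pass_through_probability(miss_rates: list[float]) -> float:
    """Chance that an attack slips through every layer, assuming each layer
    misses independently of the others."""
    for rate in miss_rates:
        if not 0.0 <= rate <= 1.0:
            raise ValueError(f"miss rate must be between 0 and 1, got {rate}")
    # Independent events: multiply the per-layer miss probabilities.
    return math.prod(miss_rates)


if __name__ == "__main__":
    # Hypothetical miss rates: data checks, ML scoring, document review.
    layers = [0.30, 0.20, 0.10]
    print(f"{pass_through_probability(layers):.2%}")  # 0.60%
```

Three layers that individually miss 10% to 30% of attacks together miss well under 1%. Independence is the optimistic case, though. If a single AI-generated document fools two visual checks for the same reason, those holes line up. This is one argument for mixing different *kinds* of layers rather than adding more of the same.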

Core components of an effective Swiss Cheese Model for detecting AI-driven fraud include:

Data orchestration

Financial institutions and fintechs should use a variety of data vendors to cross-verify information and detect inconsistencies. Examples include identity verification services, credit bureaus, and social media analysis. By combining data from different vendors, financial institutions and fintechs can improve their ability to spot suspicious patterns that single data sources might miss.
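A minimal version of cross-verification compares what each vendor returned for the same applicant and surfaces any attribute on which the vendors disagree. The sketch below assumes vendor responses have already been mapped into a common attribute schema. The vendor names and the `find_inconsistencies` function are hypothetical.

```python
def find_inconsistencies(vendor_records: dict[str, dict[str, str]]) -> dict[str, dict[str, str]]:
    """Return, for each attribute the vendors disagree on, what each vendor reported.

    `vendor_records` maps a vendor name to the attributes it returned for the
    same applicant. Values are compared case- and whitespace-insensitively;
    a vendor that didn't return an attribute is simply skipped for it.
    """
    attributes = sorted({attr for record in vendor_records.values() for attr in record})
    conflicts = {}
    for attr in attributes:
        reported = {vendor: rec[attr] for vendor, rec in vendor_records.items() if attr in rec}
        distinct = {" ".join(value.lower().split()) for value in reported.values()}
        if len(distinct) > 1:
            conflicts[attr] = reported
    return conflicts


if __name__ == "__main__":
    records = {
        "identity_vendor": {"name": "Jane Doe", "dob": "1990-04-12", "address": "12 Elm St"},
        "credit_bureau": {"name": "JANE DOE", "dob": "1990-04-12", "address": "98 Oak Ave"},
        "phone_carrier": {"name": "Jane  Doe"},
    }
    print(find_inconsistencies(records))
    # {'address': {'identity_vendor': '12 Elm St', 'credit_bureau': '98 Oak Ave'}}
```

Exact matching after lowercasing is deliberately naive. Production address and name matching usually needs fuzzier normalization (for example, treating "Street" and "St" as the same), or legitimate applicants will trip the check.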


Real-time monitoring and machine learning models

Machine learning models are crucial for detecting patterns and anomalies that indicate fraudulent activity. These models can analyze vast amounts of transaction data to identify behaviors that deviate from the norm. When they are retrained on fresh data, machine learning models can adapt to evolving fraud tactics and improve their accuracy over time. Financial institutions should deploy these models to monitor transactions in real time, flagging suspicious activities for further investigation.
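To make "deviate from the norm" concrete, here is a deliberately simple statistical baseline rather than a trained ML model. It keeps a running mean and standard deviation of each account's transaction amounts (Welford's online algorithm, so it uses constant memory per account and suits streaming data). It flags any amount more than three standard deviations from that account's history. The class names, the 3.0 threshold, and the five-transaction warm-up are illustrative assumptions. Real systems score many features at once, not just the amount.

```python
import math
from dataclasses import dataclass


@dataclass
class RunningStats:
    """Running mean and variance via Welford's online algorithm."""
    count: int = 0
    mean: float = 0.0
    m2: float = 0.0  # running sum of squared deviations from the mean

    def update(self, x: float) -> None:
        self.count += 1
        delta = x - self.mean
        self.mean += delta / self.count
        self.m2 += delta * (x - self.mean)

    def std(self) -> float:
        # Sample standard deviation; zero until there are at least two points.
        return math.sqrt(self.m2 / (self.count - 1)) if self.count > 1 else 0.0


class AmountMonitor:
    """Flag transaction amounts that sit far outside an account's own history."""

    def __init__(self, z_threshold: float = 3.0, min_history: int = 5):
        self.z_threshold = z_threshold
        self.min_history = min_history
        self.stats: dict[str, RunningStats] = {}

    def check(self, account_id: str, amount: float) -> bool:
        """Return True if the amount is anomalous for this account.

        Only unflagged amounts update the baseline, so flagged amounts
        don't inflate what counts as "normal" for the account.
        """
        s = self.stats.setdefault(account_id, RunningStats())
        flagged = False
        if s.count >= self.min_history and s.std() > 0:
            z = (amount - s.mean) / s.std()
            flagged = abs(z) > self.z_threshold
        if not flagged:
            s.update(amount)
        return flagged


if __name__ == "__main__":
    monitor = AmountMonitor()
    history = [40.0, 55.0, 38.0, 60.0, 47.0, 52.0, 2500.0]
    print([monitor.check("acct-1", amount) for amount in history])
    # [False, False, False, False, False, False, True]
```

The warm-up period means brand-new accounts get no protection from this check. That gap is one reason onboarding-time signals matter as much as transaction monitoring.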


Document fraud detection

Advanced document fraud detection is essential for identifying counterfeit documents. These tools use machine learning and AI to analyze images and documents for signs of tampering, inconsistencies, and forgeries. By examining elements such as font mismatches, irregularities in image quality, and discrepancies in document metadata, these systems can detect fraudulent documents more accurately than manual reviews. Financial institutions and fintechs should integrate these tools into their onboarding and underwriting verification processes to enhance security and efficiency.
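Metadata checks are among the cheapest of these signals. A sketch follows, assuming the document's metadata has already been extracted into a dictionary with producer, creator, and ISO 8601 timestamp fields. The field names, the one-day edit gap, and the watchlist of editing tools are hypothetical and would need tuning against a real document population.

```python
from datetime import datetime, timedelta

# Hypothetical watchlist of image-editing tools; tune it against your own document population.
EDITING_TOOLS = ("photoshop", "gimp", "canva")


def metadata_red_flags(meta: dict[str, str], max_edit_gap: timedelta = timedelta(days=1)) -> list[str]:
    """Return human-readable red flags for one document's metadata.

    `meta` is assumed to hold already-extracted fields: "producer", "creator",
    and ISO 8601 "created"/"modified" timestamps.
    """
    flags = []
    software = f"{meta.get('producer', '')} {meta.get('creator', '')}".lower()
    for tool in EDITING_TOOLS:
        if tool in software:
            flags.append(f"produced or edited with {tool}")
    created, modified = meta.get("created"), meta.get("modified")
    if not created:
        flags.append("missing creation date")
    elif modified:
        gap = datetime.fromisoformat(modified) - datetime.fromisoformat(created)
        if gap > max_edit_gap:
            flags.append(f"modified {gap.days} days after creation")
    return flags


if __name__ == "__main__":
    suspicious = {
        "producer": "Adobe Photoshop 25.0",
        "created": "2025-03-01T09:00:00",
        "modified": "2025-03-18T22:15:00",
    }
    print(metadata_red_flags(suspicious))
    # ['produced or edited with photoshop', 'modified 17 days after creation']
```

Metadata is easy to strip or rewrite, so an empty result does not mean a document is genuine. It only means this particular check found nothing.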


Step-up verification

Step-up verification involves increasing the level of security for applications or transactions that are deemed high-risk. This approach requires users to provide additional verification when they perform activities that fall outside their usual behavior, such as large transactions or access from unfamiliar devices.

Historically, many organizations relied on one-time passwords and additional security questions because they were easy to use. However, organizations can just as easily leverage more sophisticated step-up methods, such as document verification and biometric verification, with Alloy’s SDK. By implementing step-up verification, financial institutions and fintechs can add an extra layer of protection for sensitive actions without inconveniencing users for routine transactions.
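The decision logic behind step-up verification can be sketched as a small policy function. Routine activity passes, one risk signal triggers a lighter check, and stacked signals trigger the strongest one. The sketch below is a hypothetical illustration, not Alloy's SDK or API. The signal definitions, the 3x "large transaction" multiplier, and the mapping from signals to methods are all assumptions.

```python
from dataclasses import dataclass
from enum import Enum


class Action(Enum):
    ALLOW = "allow"
    STEP_UP_OTP = "step_up_otp"
    STEP_UP_DOC_AND_BIOMETRIC = "step_up_doc_and_biometric"


@dataclass
class Transaction:
    amount: float
    device_id: str


@dataclass
class CustomerProfile:
    typical_max_amount: float  # largest amount this customer usually moves
    known_devices: set[str]


def step_up_decision(txn: Transaction, profile: CustomerProfile,
                     large_multiplier: float = 3.0) -> Action:
    """Escalate verification with the number of risk signals present."""
    is_large = txn.amount > profile.typical_max_amount * large_multiplier
    is_new_device = txn.device_id not in profile.known_devices
    if is_large and is_new_device:
        # Both signals at once: ask for the strongest evidence available.
        return Action.STEP_UP_DOC_AND_BIOMETRIC
    if is_large or is_new_device:
        return Action.STEP_UP_OTP
    # Routine activity passes without extra friction.
    return Action.ALLOW


if __name__ == "__main__":
    profile = CustomerProfile(typical_max_amount=200.0, known_devices={"phone-1"})
    for txn in (Transaction(150.0, "phone-1"), Transaction(900.0, "phone-1"), Transaction(900.0, "laptop-9")):
        print(txn, step_up_decision(txn, profile).value)
```

Given the concerns above about one-time passwords, an organization could just as reasonably route every step-up to document or biometric verification. That is a one-line change to the policy, not to the signals.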


Actionable AI tools

Alloy’s Fraud Attack Radar provides more than just alerts — it delivers actionable insights to help clients quickly triage threats, adjust fraud policies, and respond with confidence. By continuously learning from data across the customer lifecycle, Fraud Attack Radar enables clients to assess risk at the portfolio level and stay ahead of emerging fraud patterns.


AI Agents

AI agents can be employed to enhance fraud detection and response efforts. These agents can monitor transactions, flag suspicious behavior, and even interact with customers to verify their identities or gather additional information. AI agents can work around the clock, providing continuous surveillance and rapid responses to potential threats. Integrating AI agents into customer service and fraud prevention teams can help financial institutions address issues promptly and efficiently.

The tools to fight AI-driven fraud already exist

AI-driven fraud presents a formidable challenge, but financial organizations like banks, credit unions, and fintech companies have robust toolkits available to help combat these sophisticated attacks. Proactively adopting these tools and strategies will help safeguard against the evolving threat of AI-powered fraud.